Paradigms and Priors

Scott Alexander at Slate Star Codex has been blogging lately about Thomas Kuhn and the idea of paradigm shifts in science. This is a topic near and dear to my heart, so I wanted to take the opportunity to share some of my thoughts and answer some questions that Scott asked in his posts.

The Big Idea

I’m going to start with my own rough summary of what I take from Kuhn’s work. But since this is all in response to Scott’s book review of The Structure of Scientific Revolutions, you may want to read his post first.

The main idea I draw from Kuhn’s work is that science and knowledge aren’t only, or even primarily, a collection of facts. Observing the world and incorporating evidence is important to learning about the world, but evidence can’t really be interpreted or used without a prior framework or model through which to interpret it. For example, check out this Twitter thread: researchers were able to draw thousands of different and often mutually contradictory conclusions from a single data set by varying the theoretical assumptions they used to analyze it.

Kuhn also provided a response to Popperian falsificationism. No theory can ever truly be falsified by observation, because you can force almost any observation to match most theories with enough special cases and extra rules added in. And it’s often quite difficult to tell whether a given extra rule is an important development in scientific knowledge, or merely motivated reasoning to protect a familiar theory. After all, if you claim that objects with different weights fall at the same speed, you then have to explain why that doesn’t apply to bowling balls and feathers.

This is often described as the theory-ladenness of observation. Even when we think we’re directly perceiving things, those perceptions are always mediated by our theories of how the world works and can’t be fully separated from them. This is most obvious when engaging in a complicated indirect experiment: there’s a lot of work going on between “I’m hearing a clicking sound from this thing I’m holding in my hand” and “a bunch of atoms just ejected alpha particles from their nuclei”.

But even in more straightforward scenarios, any inference comes with a lot of theory behind it. I drop two things that weigh different amounts, and see that the heavier one falls faster—proof that Galileo was wrong!

Or even more mundanely: I look through my window when I wake up, see a puddle, and conclude that it rained overnight. Of course I’m relying on the assumption that when I look through my window I actually see what’s on the other side of it, and not, say, a clever science-fiction style holoscreen. But more importantly, my conclusion that it rained depends on a lot of assumptions I normally wouldn’t explicitly mention—that rain would leave a puddle, and that my patio would be dry if it hadn’t rained.

(In fact, I discovered several months after moving in that my air conditioner condensation tray overflows on hot days. So the presence of puddles doesn’t actually tell me that it rained overnight).

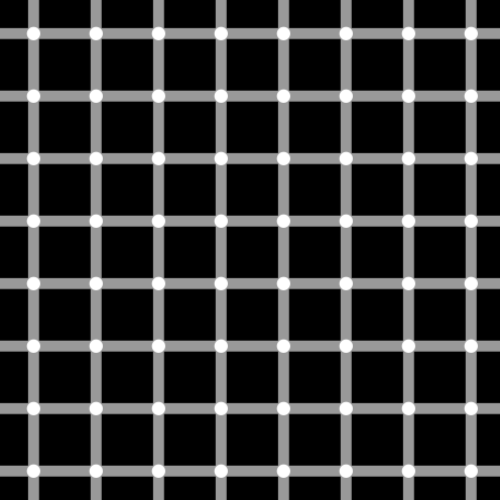

Even direct perception, what we can see right in front of us, is mediated by internal modeling our brains do to put our observations into some comprehensible context. This is why optical illusions work so well; they hijack the modeling assumptions of your perceptual system to make you “see” things that aren’t there.

There are no black dots in this picture.

Who are you going to believe: me, or your own eyes?

What does this tell us about science?

Kuhn divides scientific practice into three categories. The first he calls pre-science, where there is no generally accepted model to interpret observations. Most of life falls into this category—which makes sense, because most of life isn’t “science”. Subjects like history and psychology with multiple competing “schools” of thought are pre-scientific, because while there are a number of useful and informative models that we can use to understand parts of the subject, no single model provides a coherent shared context for all of our evidence. There is no unifying consensus perspective that basically explains everything we know.

A model that does achieve such a coherent consensus is called a paradigm. A paradigm is a theory that explains all the known evidence in a reasonable and satisfactory way. When there is a consensus paradigm, Kuhn says that we have “normal science”. And in normal science, the idea that scientists are just collecting more facts actually makes sense. Everyone is using the same underlying theory, so no one needs to spend time arguing about it; the work of science is just to collect more data to interpret within that theory.

But sometimes during the course of normal science you find anomalies, evidence that your paradigm can’t readily explain. If you have one or two anomalies, the best response is to assume that they really are anomalies—there’s something weird going on there, but it isn’t a problem for the paradigm.

A great example of an unimportant anomaly is the OPERA experiment from a few years ago that measured neutrinos traveling faster than the speed of light. This meant one of two things: either special relativity, a key component of the modern physics paradigm, was wrong; or there was an error somewhere in a delicate measurement process. Pretty much everyone assumed that the measurement was flawed, and pretty much everyone was right.

In contrast, sometimes the anomalies aren’t so easy to resolve. Scientists find more and more anomalies, more results that the dominant paradigm can’t explain. It becomes clear the paradigm is flawed, and can’t provide a satisfying explanation for the evidence. At this point people start experimenting with other models, and with luck, eventually find something new and different that explains all the evidence, old and new, normal and anomalous. A new paradigm takes over, and normal science returns.

(Notice that the old paradigm was never falsified, since you can always add epicycles to make the new data fit. In fact, the proverbial “epicycles” were added to the Ptolemaic model of the solar system to make it fit astronomical observations. In the early days of the Copernican model, it actually fit the evidence worse than the Ptolemaic model did—but it didn’t require the convoluted epicycles that made the Ptolemaic model work. Sabine Hossenfelder describes this process as, not falsification, but “implausification”: “a continuously adapted theory becomes increasingly difficult and arcane—not to say ugly—and eventually practitioners lose interest.”)

Importantly, Kuhn argued that two different paradigms would be incommensurable, so different from each other that communication between them is effectively impossible. I think this is sometimes overblown, but also often underestimated. Imagine trying to explain a modern medical diagnosis to someone who believes in four humors theory. Or remember how difficult it is to have conversations with someone whose politics are very different from your own; the background assumptions about how the world works are sometimes so different that it’s hard to agree even on basic facts.[1]

Scott’s example questions

Now I can turn to the very good questions Scott asks in section II of his book review.

For example, consider three scientific papers I’ve looked at on this blog recently….What paradigm is each of these working from?

As a preliminary note, if we’re maintaining the Kuhnian distinction between a paradigm on the one hand and a model or a school of thought on the other, it is plausible that none of these are working in true paradigms. One major difficulty in many fields, especially the social sciences, is that there isn’t a paradigm that unifies all our disparate strands of knowledge. But asking what possibly-incommensurable model or theory these papers are working from is still a useful and informative exercise.

I’m going to discuss the first study Scott mentions in a fair amount of depth, because it turned out I had a lot to say about it. I’ll follow that up by making briefer comments on his other two examples.

Cipriani, Ioannidis, et al.

– Cipriani, Ioannidis, et al perform a meta-analysis of antidepressant effect sizes and find that although almost all of them seem to work, amitriptyline works best.

This is actually a great example of some of the ways paradigms and models shape science. The study is a meta-analysis of various antidepressants to assess their effectiveness. So what’s the underlying model here?

Probably the best answer is: “depression is a real thing that can be caused or alleviated by chemicals”. Think about how completely incoherent this entire study would seem to a Szaszian who thinks that mental illnesses are just choices made by people with weird preferences, to a medieval farmer who thinks mental illnesses are caused by demonic possession, or to a natural-health advocate who thinks that “chemicals” are bad for you. The medical model of mental illness is powerful and influential enough that we often don’t even notice we’re relying on it, or that there are alternatives. But it’s not the only model that we could use.[2]

While this is the best answer to Scott’s question, it’s not the only one. When Scott originally wrote about this study he compared it to one he had done himself, which got very different results. Since they’re (mostly) studying the same drugs, in the same world, they “should” get similar results. But they don’t. Why not?

I’m not in any position to actually answer that question, since I don’t know much about psychiatric medications. But I can point out one very plausible reason: the studies made different modeling assumptions. And Scott highlights some of these assumptions himself in his analysis. For instance, he looks at the way Cipriani et al. control for possible bias in studies:

I’m actually a little concerned about the exact way he did this. If a pharma company sponsored a trial, he called the pharma company’s drug’s results biased, and the comparison drugs unbiased….

But surely if Lundbeck wants to make Celexa look good [relative to clomipramine], they can either finagle the Celexa numbers upward, finagle the clomipramine numbers downward, or both. If you flag Celexa as high risk of being finagled upwards, but don’t flag clomipramine as at risk of being finagled downwards, I worry you’re likely to understate clomipramine’s case.

I make a big deal of this because about a dozen of the twenty clomipramine studies included in the analysis were very obviously pharma companies using clomipramine as the comparison for their own drug that they wanted to make look good; I suspect some of the non-obvious ones were too. If all of these are marked as “no risk of bias against clomipramine”, we’re going to have clomipramine come out looking pretty bad.

Cipriani et al. had a model for which studies were producing reliable data, and fed it into their meta-analysis. Notice they aren’t denying or ignoring the numbers that were reported, but they are interpreting them differently based on background assumptions they have about the way studies work. And Scott is disagreeing with those assumptions and suggesting a different set of assumptions instead.

(For bonus points, look at why Scott flags this specific case. Cipriani et al. rated clomipramine badly, but Scott’s experience is that clomipramine is quite good. This is one of Kuhn’s paradigm-violating anomalies: the model says you should expect one result, but you observe another. Sometimes this causes you to question the observation; sometimes a drug that “everyone knows” is great actually doesn’t do very much. But sometimes it causes you to question the model instead.)

Scott’s model here isn’t really incommensurable with Cipriani et al.’s in a deep sense. But the difference in models does make numbers incommensurable. An odds ratio of 1.5 means something very different if your model expects it to be biased downwards than it does if you expect it to be neutral—or biased upwards. You can’t escape this sort of assumption just by “looking at the numbers”.

And this is true even though Scott and Cipriani et al. are largely working with the same sorts of models. They both believe in the medical model of mental illness. Their paradigm does include the idea that randomized controlled trials work, as Scott suggests in his piece. A bit more subtly, their shared paradigm also includes whatever instruments they use to measure antidepressant effectiveness. Since Cipriani et al. is actually a meta-analysis, they don’t address this directly. But each study they include is probably using some sort of questionnaire to assess how depressed people are. The numbers they get are only coherent or meaningful at all if you think that questionnaire is measuring something you care about.

There’s one more paradigm choice here that I want to draw attention to, because it’s important, and because I know Scott is interested in it, and because we may be in the middle of a moderate paradigm shift right now.

Studies like this one tend to assume that a given drug will work about the same for everyone. And then people find that no antidepressant works consistently for everyone, and they all have small effect sizes, and conclude that maybe antidepressants aren’t very useful. But that’s hard to square with the fact that people regularly report massive benefits from going on antidepressants. We found an anomaly!

A number of researchers, including Scott himself, have suggested that any given person will respond well to some antidepressants and poorly to others. So when a study says that bupropion (or whatever) has a small effect on average, maybe that doesn’t mean bupropion isn’t helping anyone. Maybe instead it’s helping some people quite a lot, and it’s completely useless for other people, and so on average its effect is small but positive.
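To make the arithmetic concrete, here is a minimal sketch in Python. Every number in it is made up for illustration (the 30% responder share and the 10-point improvement are assumptions, not figures from Cipriani et al. or any other study); the point is only that a small average is compatible with a large effect in a subgroup.

```python
# Hypothetical numbers for illustration only; not taken from any trial.
responder_share = 0.3      # assumed fraction of patients the drug helps
responder_effect = 10.0    # assumed improvement (questionnaire points) for responders
nonresponder_effect = 0.0  # assumed improvement for everyone else

# The average effect a trial reports mixes both groups together.
average_effect = (responder_share * responder_effect
                  + (1 - responder_share) * nonresponder_effect)

print(f"average effect across all patients: {average_effect:.1f} points")
print(f"effect among responders:            {responder_effect:.1f} points")
```

Under these assumed numbers the trial-wide average is less than a third of what the responders actually experience, and the average alone can't tell you which story is true.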

But this is a completely different way of thinking clinically and scientifically about these drugs. And it potentially undermines the entire idea behind meta-analyses like Cipriani et al. If our data is useless because we’re doing too much averaging, then averaging all our averages together isn’t really going to help. Maybe we should be doing something entirely different. We just need to figure out what.

Ceballos, Ehrlich et al.

– Ceballos, Ehrlich, et al calculate whether more species have become extinct recently than would be expected based on historical background rates; after finding almost 500 extinctions since 1900, they conclude they definitely have.

I actually think Scott mostly answers his own questions here.

As for the extinction paper, surely it can be attributed to some chain of thought starting with Cuvier’s catastrophism, passing through Lyell, and continuing on to the current day, based on the idea that the world has changed dramatically over its history and new species can arise and old ones disappear. But is that “the” paradigm of biology, or ecology, or whatever field Ceballos and Lyell are working in? Doesn’t it also depend on the idea of species, a different paradigm starting with Linnaeus and developed by zoologists over the ensuing centuries? It looks like it dips into a bunch of different paradigms, but is not wholly within any.

The paper is using a model where

- Species is a real and important distinction;

- Species extinction is a thing that happens and matters;

- Their calculated background rate for extinction is the relevant comparison.

(You can in fact see a lot of their model/paradigm come through pretty clearly in the “Discussion” section of the paper, which is good writing practice.)

Scott seems concerned that it might dip into a whole bunch of paradigms, but I don’t think that’s really a problem. Any true unifying paradigm will include more than one big idea; on the other hand, if there isn’t a true paradigm, you’d expect research to sometimes dip into multiple models or schools of thought. My impression is that biology is closer to having a real paradigm than not, but I can’t say for sure.

Terrell et al.

– Terrell et al examine contributions to open source projects and find that men are more likely to be accepted than women when adjusted for some measure of competence they believe is appropriate, suggesting a gender bias.

Social science tends to be less paradigm-y than the physical sciences, and this sort of politically-charged sociological question is probably the least paradigm-y of all, in that there’s no well-developed overarching framework that can be used to explain and understand data. If you can look at a study and know that people will immediately start arguing about what it “really means”, there’s probably no paradigm.

There is, however, a model underlying any study like this, as there is for any sort of research. Here I’d summarize it something like:

- Gender is an interesting and important construct;

- Acceptance rates for pull requests are a measure of (perceived) code quality;

- Their program that evaluated “obvious gender cues” does a good job of evaluating gender as perceived by other GitHub users;

- The “insider versus outsider” measure they report is important;

- The confounders they check are important, and the confounders they don’t check aren’t.

Basically, any time you get to do some comparisons and not others, or report some numbers and not others, you have to fall back on a model or paradigm to tell you which comparisons are actually important. Without some guiding model, you’d just have to report every number you measured in a giant table.

Now, sometimes people actually do this. They measure a whole bunch of data, and then they try to correlate everything with everything else, and see what pops up. This is not usually good research practice.
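A rough back-of-the-envelope calculation shows why. The sketch below assumes a hypothetical study with 20 measured variables and the conventional 0.05 significance threshold, and asks how many "significant" pairwise correlations you'd expect if nothing were actually related to anything.

```python
from math import comb

n_variables = 20   # hypothetical number of measured variables
alpha = 0.05       # conventional significance threshold

# Every variable gets paired with every other variable.
n_pairs = comb(n_variables, 2)

# Expected number of spurious "hits" if no real relationships exist at all.
expected_false_hits = alpha * n_pairs

print(n_pairs, expected_false_hits)
```

With these assumed numbers there are 190 comparisons and roughly nine or ten spurious findings expected, so something will always "pop up" whether or not there is anything to find.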

If you had exactly this same paper except, instead of “men and women” it covered “blondes and brunettes”, you’d probably be able to communicate the content of the paper to other people; but they’d probably look at you kind of funny, because why would that possibly matter?

Anomalies and Bayes

Possibly the most interesting thing Scott has posted is his Grand Unified Chart relating Kuhnian theories to related ideas in other disciplines. The chart takes the Kuhnian ideas of “paradigm”, “data”, and “anomaly” and identifies equivalents from other fields. (I’ve flipped the order of the second and third columns here). In political discourse Scott relates them to “ideology”, “facts”, and “cognitive dissonance”; in psychology he relates them to “prediction”, “sense data”, and “surprisal”.

In the original version of the chart, several entries in the “anomalies” column were left blank. He has since filled some of them in, and removed a couple of other rows. I think his answer for the “Bayesian probability” row is wrong; but I think it’s interestingly wrong, in a way that effectively illuminates some of the philosophical and practical issues with Bayesian reasoning.

A quick informal refresher: in Bayesian inference, we start with some prior probability that describes what we originally believe the world is like, by specifying the probabilities of various things happening. Then we make observations of the world, and update our beliefs, giving our conclusion as a posterior probability.

The rule we use to update our beliefs is called Bayes’s Theorem (hence the name “Bayesian inference”). Specifically, we use the mathematical formula \[ P(H |E) = \frac{ P(E|H) P(H)}{P(E)}, \] where $P$ is the probability function, $H$ is some hypothesis, and $E$ is our new evidence.
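As a quick worked example, here is the puddle inference from earlier run through the formula. The probabilities are invented for illustration: a 20% prior chance of overnight rain, a 90% chance of a puddle if it rained, and a 30% chance of a puddle anyway (say, from the air conditioner). The function name `posterior` is also just a label for this sketch.

```python
def posterior(prior_h, p_e_given_h, p_e_given_not_h):
    """Return P(H|E) via Bayes's theorem for a hypothesis H and its negation."""
    # P(E), by the law of total probability over H and not-H.
    p_e = p_e_given_h * prior_h + p_e_given_not_h * (1 - prior_h)
    return p_e_given_h * prior_h / p_e

# H = "it rained overnight", E = "there is a puddle on the patio".
p_rain = posterior(prior_h=0.2, p_e_given_h=0.9, p_e_given_not_h=0.3)
print(round(p_rain, 3))
```

Under these assumptions the puddle raises the probability of rain from 0.2 to about 0.43: real evidence, but far from proof, precisely because the model allows another source of puddles.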

I have often drawn the same comparison Scott draws between a Kuhnian paradigm and a Bayesian prior. (They’re not exactly the same, and I’ll come back to this in a bit). And certainly Kuhnian “data” and Bayesian “evidence” correspond pretty well. But the Bayesian equivalent of the Kuhnian anomaly isn’t really the KL-divergence that Scott suggests.

KL-divergence is a mathematical way to measure how far apart two probability distributions are. So it’s an appropriate way to look at two priors and tell how different they are. But you never directly observe a probability distribution, just a collection of data points, so KL-divergence doesn’t tell you how surprising your data is. (Your prior does that on its own).
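For discrete distributions the standard definition is $D_{KL}(P \| Q) = \sum_i p_i \log(p_i / q_i)$. A minimal sketch, with two made-up priors over the same two outcomes, shows that both inputs are whole distributions rather than observations:

```python
import math

def kl_divergence(p, q):
    """D_KL(P || Q) in nats for two discrete distributions over the same outcomes.

    Assumes q[i] > 0 wherever p[i] > 0; terms with p[i] == 0 contribute nothing.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

prior_a = [0.5, 0.5]   # hypothetical prior: two outcomes equally likely
prior_b = [0.9, 0.1]   # hypothetical prior: strongly favors the first outcome

print(kl_divergence(prior_a, prior_b))  # positive: the priors differ
print(kl_divergence(prior_a, prior_a))  # zero: a prior never diverges from itself
```

Notice that there is nowhere in this function to plug in a single data point, which is the sense in which it compares priors rather than measuring how surprising an observation is.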

But “surprising evidence” isn’t the same thing as an anomaly. If you make a new observation that was likely under your prior, you get an updated posterior probability and everything is fine. And if you make a new observation that was unlikely under your prior, you get an updated posterior probability and everything is fine. As long as the true[3] hypothesis is in your prior at all, you’ll converge to it with enough evidence; that’s one of the great strengths of Bayesian inference. So even a very surprising observation doesn’t force you to rethink your model.

In contrast, if you make a new observation that was impossible under your prior, you hit a literal divide-by-zero error. If your prior says that $E$ can’t happen, then you can’t actually carry out the Bayesian update calculation, because Bayes’s rule tells you to divide by $P(E)$—which is zero. And this is the Bayesian equivalent of a Kuhnian anomaly.
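Here is a toy version of that crash. The two coin hypotheses and the "lands on its edge" observation are invented for illustration, and `bayes_update` is a hypothetical helper rather than any library's API.

```python
def bayes_update(prior, likelihood):
    """Update a discrete prior {hypothesis: P(H)} given {hypothesis: P(E|H)}."""
    p_e = sum(prior[h] * likelihood[h] for h in prior)  # P(E)
    return {h: prior[h] * likelihood[h] / p_e for h in prior}

# Hypothetical prior: the coin is either fair or two-headed.
prior = {"fair": 0.5, "two-headed": 0.5}

# Seeing heads is merely informative: the update goes through.
after_heads = bayes_update(prior, {"fair": 0.5, "two-headed": 1.0})
print(after_heads)

# Seeing the coin land on its edge is impossible under both hypotheses,
# so P(E) = 0 and the update cannot be computed.
try:
    bayes_update(prior, {"fair": 0.0, "two-headed": 0.0})
    crashed = False
except ZeroDivisionError:
    crashed = True
print("update crashed:", crashed)
```

The first update shifts belief toward the two-headed coin; the second has no valid answer at all, because nothing in this prior can account for what was seen.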

We can imagine a robot in an Asimov short story encountering this situation, trying to divide by zero, and crashing fatally. But people aren’t quite so easy to crash, and an intelligently designed AI wouldn’t be either. We can do something that a simple Bayesian inference algorithm doesn’t allow: we can invent a new prior and start over from the beginning. We can shift paradigms.

A theoretically perfect Bayesian inference algorithm would start with a universal prior—a prior that gives positive probability to every conceivable hypothesis and every describable piece of evidence. No observation would ever be impossible under the universal prior, so no update would require division by zero.

But it’s easier to talk about such a prior than it is to actually come up with one. The usual example I hear is the Solomonoff prior, but it is known to be uncomputable. I would guess that any useful universal prior would be similarly uncomputable. But even if I’m wrong and a theoretically computable universal prior exists, there’s definitely no way we could actually carry out the infinitely many computations it would require.

Any practical use of Bayesian inference, or really any sort of analysis, has to restrict itself to considering only a few classes of hypotheses. And that means that sometimes, the “true” hypothesis won’t be in your prior. Your prior gives it a zero probability. And that means that as you run more experiments and collect more evidence, your results will look weirder and weirder. Eventually you might get one of those zero-probability results, those anomalies. And then you have to start over.

A lot of the work of science—the “normal” work—is accumulating more evidence and feeding it to the (metaphorical) Bayesian machine. But the most difficult and creative part is coming up with better hypotheses to include in the prior. Once the “true” hypothesis is in your prior, collecting more evidence will drive its probability up. But you need to add the hypothesis to your prior first. And that’s what a paradigm shift looks like.
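A small sketch makes the difference visible. Suppose, purely for illustration, that we see 70 heads in 100 coin flips, and compare a prior that only considers biases of 0.1 and 0.5 against one that also includes 0.7. The data, the candidate biases, and the helper name are all assumptions of this example.

```python
def posterior_after(flips, prior):
    """Posterior over coin biases {P(heads): prior prob} after flips (1 = heads)."""
    weights = dict(prior)
    for flip in flips:
        for bias in weights:
            # Multiply in the likelihood of this flip under each hypothesis.
            weights[bias] *= bias if flip == 1 else 1 - bias
    total = sum(weights.values())
    return {bias: w / total for bias, w in weights.items()}

flips = [1] * 70 + [0] * 30   # made-up data: 70 heads, 30 tails

# A prior that leaves out the hypothesis best matching the data.
narrow = posterior_after(flips, {0.1: 0.5, 0.5: 0.5})

# The same data after someone thinks to add a 0.7 hypothesis.
wider = posterior_after(flips, {0.1: 1 / 3, 0.5: 1 / 3, 0.7: 1 / 3})

print(narrow)
print(wider)
```

With the narrow prior, the machine confidently settles on 0.5, the least-bad option it was given, and no amount of extra data can raise the probability of a hypothesis it never contained. Once 0.7 is added, the same evidence moves almost all of the probability onto it.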

It’s important to remember that this is an analogy; a paradigm isn’t exactly the same thing as a prior. Just as “surprising evidence” isn’t an anomaly, two priors with slightly different probabilities put on some hypotheses aren’t operating in different paradigms.

Instead, a paradigm comes before your prior. Your paradigm tells you what counts as a hypothesis, what you should include in your prior and what you should leave out. You can have two different priors in the same paradigm; you can’t have the same prior in two different paradigms. Which is kind of what it means to say that different paradigms are incommensurable.

This is probably the biggest weakness of Bayesian inference, in practice. Bayes gives you a systematic way of evaluating the hypotheses you have based on the evidence you see. But it doesn’t help you figure out what sort of hypotheses you should be considering in the first place; you need some theoretical foundation to do that.

You need a paradigm.

Have questions about philosophy of science? Questions about Bayesian inference? Want to tell me I got Kuhn completely wrong? Tweet me @ProfJayDaigle or leave a comment below, and let me know!

[1] If you’re interested in the political angle on this more than the scientific, check out the talk I gave at TedxOccidentalCollege last year.

[2] In fact, this was my third or fourth answer in the first draft of this section. Then I looked at it again and realized it was by far the best answer. That’s how paradigms work: as long as everything is working normally, you don’t even have to think about the fact that they’re there.

[3] "True" isn’t really the most accurate word to use here, but it works well enough and I want to avoid another thousand-word digression on the subject of metaphysics.

Tags: math models bayesianism probability kuhn philosophy of science epistemology

Support my writing at Ko-Fi
