Pascal's Wager, Medicine, and the Limits of Formal Reasoning

Scott Alexander at Astral Codex Ten recently wrote a good post about what he calls Pascalian Medicine. As always, the entire post is worth reading, but here’s an excerpt:

Another way of looking at this is that I must think there’s a 25% chance Vitamin D works, and a 10% chance ivermectin does. Both substances are generally safe with few side effects. So (as many commenters brought up) there’s a Pascal’s Wager like argument that someone with COVID should take both. The downside is some mild inconvenience and cost (both drugs together probably cost $20 for a week-long course). The upside is a well-below-50% but still pretty substantial probability that they could save my life.

…

But why stop there? Sure, take twenty untested chemicals for COVID. But there are almost as many poorly-tested supplements that purport to treat depression. The cold! The flu! Diabetes! Some of these have known side effects, but others are about as safe as we can ever prove anything to be. Maybe we should be taking twenty untested supplements for every condition!

Scott doesn’t seem to believe we should do this, but is trying to figure out the actual flaw in this reasoning. The most convincing argument he comes up with is based on how unreliable modern medical studies are, and how easy it is to generate spurious positive results.

I think ivermectin doesn’t work. I think that it looks like it works, because it has lots of positive studies and a few big-name endorsements. But our current scientific method is so weak and error-prone that any chemical which gets raised to researchers’ attentions and studied in depth will get approximately this amount of positive results and buzz. Look through the thirty different chemicals featured on the sidebar of the ivmmeta site if you don’t believe me.

…

Probably what I’m doing wrong here is saying that ivermectin having some decent studies raises its probability of working to 5%. I should just say 0.1% or 0.01% or whatever my prior on a randomly-selected medication treating a randomly-selected disease is (higher than you’d think, based on the argument from antibiotics).

From the Outside View, this argument seems strong. From the Inside View, I have a lot of trouble looking at a bunch of studies apparently supporting a thing, and no contrary evidence against the thing besides my own skepticism, and saying there’s a less than 1% chance that thing is true.

The Outside View argument here is completely right, and is a great illustration of the limitations of Bayesian reasoning that I talked about here and here.

Unknown Unknowns

The basic argument for Pascalian medicine goes: okay, suppose ivermectin has a 10% chance of reducing covid mortality by 10%. About a thousand people are dying of covid every day1 in the US, according to the CDC weekly tracker, so the expected benefit of giving all our covid patients ivermectin is something like saving ten lives per day.2

Even if you think the probability ivermectin works is only something like 1%, that still adds up to one life saved per day. Since ivermectin is cheap, and “generally safe with few side effects”, an expected value of “saves one life per day” looks pretty good! So maybe we should prescribe it out of an abundance of caution.3
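
The expected-value arithmetic in the last two paragraphs is just a product of three numbers. Here is a minimal sketch of it; the function name is illustrative, and the 1,000 deaths per day is the rough US figure used above, not the output of any real model.

```python
def expected_lives_saved(p_works: float, relative_reduction: float, daily_deaths: float) -> float:
    """Expected deaths averted per day if every covid patient takes the drug.

    p_works: probability the drug has any effect at all (a made-up number).
    relative_reduction: fraction of deaths it prevents, if it works.
    daily_deaths: baseline covid deaths per day.
    """
    return p_works * relative_reduction * daily_deaths


DAILY_DEATHS = 1_000  # rough US covid deaths per day, as in the text

# A 10% chance of a 10% cut in mortality, then a 1% chance of the same cut.
print(f"{expected_lives_saved(0.10, 0.10, DAILY_DEATHS):.1f} lives per day")  # 10.0 lives per day
print(f"{expected_lives_saved(0.01, 0.10, DAILY_DEATHS):.1f} lives per day")  # 1.0 lives per day
```

Note what the formula leaves out: there is no term for harm at all, which is exactly the gap the next few sections are about.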

And then we make the same argument about, apparently, twenty other drugs, and we’re taking a crazy drug cocktail. (Scott calls this the Insanity Wolf position.) So it looks like something has gone wrong. But what?

We made a basic, common error that really isn’t fully avoidable: we took a bunch of stuff we can’t measure, and decided it didn’t matter. “Generally safe with few side effects” isn’t the same as “perfectly safe”, and “cheap” isn’t the same as “free”. And something like ninety thousand people get covid in the US every day; to save that one life we’re probably giving drugs to tens of thousands of people. How confident are we that our drugs won’t hurt any of them? Especially if we give an Insanity Wolf-style twenty-drug cocktail?

Scott discusses this idea, of course. But I think he seriously underestimates the problem of unknown unknowns here. For well-understood drugs with large probable benefits, the unknown unknowns don’t matter very much. But for long-shot possible payoffs, like with ivermectin, unknown unknowns present a real, unavoidable problem. And the theoretically, mathematically correct response is to throw up our hands and take the Outside View instead.

Three Example Drugs

I want to take a look at three different drugs and do some illustrative calculations for the possible risks and benefits.

Paxlovid

There are always unknown unknowns, but in many cases we can put bounds on how good, or bad, things can be. Paxlovid, Pfizer’s new antiviral pill, provides a good example of this reasoning. In trials, Paxlovid cut covid hospitalizations and deaths by about 90%.4 Let’s assume that’s a wildly optimistic overestimate, and give it a 50% chance of cutting deaths by 50%. Then in expectation that’s going to save a couple hundred lives each day.

What are the risks? This is a new drug so it’s hard to know what they are; all we know is that (1) Pfizer didn’t expect the side effects to be too bad, based on prior knowledge of this drug class, and (2) they didn’t notice anything too dramatic in the trial they ran. That doesn’t tell us how bad the side effects are, but it does put limits on them: if Paxlovid killed 1% of the people who took it, we’d know.

But suppose Paxlovid kills .1% of everyone who takes it. That’s about as high as it could go without us probably having noticed already, since the trial administered it to about 600 people and none of them died. (And realistically if it killed .1% of people, way more than that would have severe side effects and we probably would have noticed.) If we give Paxlovid to everyone in the US who gets covid, that’s about 90,000 people a day, and Paxlovid would kill 90 people a day. And that’s less than the couple hundred lives it would save.
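
Here is the Paxlovid comparison as a quick sketch, using the deliberately pessimistic numbers from the text: 1,000 deaths and 90,000 cases per day, a 50% chance of a 50% cut in deaths, and a 0.1% fatality rate from the drug itself. The last few lines are an extra check, assuming deaths occur independently, of how much a trial arm of 607 patients with zero deaths really constrains that fatality rate. Not very much on its own, since zero deaths at a 0.1% rate is still more likely than not, which is why the point about severe side effects showing up first carries a lot of the weight.

```python
DAILY_DEATHS = 1_000   # US covid deaths per day, as in the text
DAILY_CASES = 90_000   # US covid cases per day, as in the text

# Deliberately pessimistic benefit: a 50% chance of a 50% cut in deaths.
benefit = 0.5 * 0.5 * DAILY_DEATHS

# Deliberately pessimistic harm: the drug itself kills 0.1% of the people who take it.
harm_rate = 0.001
harm = harm_rate * DAILY_CASES

print(f"benefit {benefit:.0f}, harm {harm:.0f}, net {benefit - harm:.0f} lives per day")
# benefit 250, harm 90, net 160 lives per day

# How strongly does "0 deaths among 607 treated patients" rule out that harm rate?
# Assuming independent deaths, P(zero deaths) = (1 - rate) ** n.
n_treated = 607
p_zero = (1 - harm_rate) ** n_treated
print(f"P(no deaths in {n_treated} patients at a {harm_rate:.1%} death rate) = {p_zero:.2f}")

# The "rule of three": zero events in n patients gives an approximate
# 95% upper confidence bound of 3/n on the event rate.
print(f"rough 95% upper bound on the death rate: {3 / n_treated:.2%}")
```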

Now, all of these numbers are extremely handwavy. But I chose them to make Paxlovid look as bad as reasonably possible, and it still comes out looking pretty good. My estimate of the benefit of Paxlovid was a huge lowball; it’s probably going to save closer to 800 lives a day than 200 if we manage to give it to everybody. And on the other hand, I’d be shocked if it’s anywhere near as dangerous as I assumed in the last paragraph. Sure, there’s some minuscule chance that it’s really, really dangerous but only several years after you take it, but since that’s not how these drugs usually work, we can round that off to zero.

The benefit of Paxlovid is large enough that it outweighs any vaguely reasonable estimate of the costs. And we don’t need any especially fancy calculations to see that.

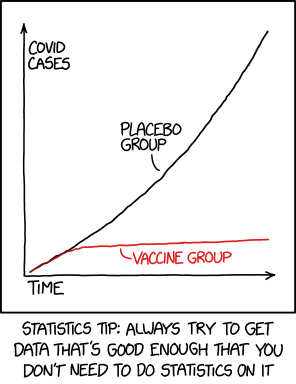

We could make basically the same argument about vaccines, except the worst plausible numbers look even better than for Paxlovid.

Tylenol

We can run a similar analysis with common everyday drugs like Tylenol. Scott observes that “We don’t fret over the unknown unknowns of Benadryl or Tylenol or whatever, even though we know their benefits are minor.” But by the same token, we are also reasonably confident that the unknown unknown costs of those drugs are minor. If Tylenol killed .1% of patients who took it, or even .01%, we would know. (And in fact we know Tylenol can cause liver damage, and that is a thing we very much do fret over.) Sure, unknown harms always could exist. But in this case we can be pretty confident that they have to be really small.

Apparently a new potentially deadly side effect of Tylenol was discovered in 2013. If I’m reading the FDA report correctly, they believe that one person has died from this side effect since 1969. That’s the scale of side effect that can slip under the radar for a drug as widely taken and studied as Tylenol.

Tylenol could have unknown unknowns, but they won’t be very unknown.

Back to Ivermectin

Now compare this with the ivermectin situation. Let’s suppose we give ivermectin a 10% chance of being effective, with a benefit of reducing deaths by 20%. (The Together trial has a non-significant effect of about 10%, so let’s double that.) Then in expectation we’re saving like 2% of lives a day, which is 20 lives saved if we give it to everyone.

How many people would ivermectin have to kill to net out negative? If we give it to 90,000 people every day, then 20 is about .02%. So does ivermectin kill about .02% of the people who take it? My guess is, probably not. But that seems a lot more within the realm of “maybe, it’s hard to be sure”.

We also reach the point where a lot of our ass-pull assumptions start to really matter. We said “maybe ivermectin has a 10% chance of working”. Scott’s the expert, not me, but that seems high to me. (Do you really think that one in ten drugs that have vague but mildly-promising data in preliminary trials pan out?) If we say ivermectin has a 1% chance of reducing deaths by 20%, then our expected value is two lives per day.
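
The break-even arithmetic in the last two paragraphs fits in one small function: divide the expected lives saved by the number of people treated. The 20% effect size and the 1,000 deaths and 90,000 cases per day are the figures from the text; the function name is just for illustration.

```python
DAILY_DEATHS = 1_000   # US covid deaths per day, as in the text
DAILY_CASES = 90_000   # US covid cases per day, as in the text


def break_even_fatality_rate(p_works: float, relative_reduction: float) -> float:
    """Fraction of treated patients the drug could kill before its expected
    harm cancels its expected benefit, assuming everyone with covid takes it."""
    lives_saved = p_works * relative_reduction * DAILY_DEATHS
    return lives_saved / DAILY_CASES


for p_works in (0.10, 0.01):
    rate = break_even_fatality_rate(p_works, 0.20)
    print(f"P(works) = {p_works:.0%}: break-even fatality rate {rate:.4%}, "
          f"about 1 in {1 / rate:,.0f} patients")
```

Dropping the chance of working from 10% to 1% shrinks the harm budget tenfold, from roughly 1 in 4,500 patients to roughly 1 in 45,000, which is well into the range where nobody could claim to have measured it.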

This could still pencil out as a good trade, but with benefits so small (and uncertain) it could easily not be worth it. Especially if we account for the guaranteed annoyance of taking a pill and the common minor side effects we know ivermectin has.

The Problem with Made-Up Numbers

But the larger point here is that all this math is bullshit. Are the odds of ivermectin working 10%? 1%? .01%? Where did that number come from? What do we mean by “working”—is it a 5% improvement? A 50% improvement?5 And at the same time, I don’t have real odds for “negative side effects”, which covers a lot of ground. (Scott himself points out that the odds of ivermectin unexpectedly killing you are definitely not zero.) And all this is the simple version of the calculation, where we don’t try to weigh things like “fever from covid might last one day less?” versus “ivermectin can cause fever?”

Scott argued many years ago that if it’s worth doing, it’s worth doing with made-up statistics. And I don’t really disagree with that essay. Doing experimental calculations with made-up numbers can give us information, and I certainly think the analysis of Paxlovid that I did above tells us something useful. But to learn anything from these calculations, we need our made-up numbers to at least vaguely reflect reality.

Scott wrote:

Remember the Bayes mammogram problem? The correct answer is 7.8%; most doctors (and others) intuitively feel like the answer should be about 80%. So doctors – who are specifically trained in having good intuitive judgment about diseases – are wrong by an order of magnitude….But suppose some doctor’s internet is down (you have NO IDEA how much doctors secretly rely on the Internet) and she can’t remember the prevalence of breast cancer. If the doctor thinks her guess will be off by less than an order of magnitude, then making up a number and plugging it into Bayes will be more accurate than just using a gut feeling about how likely the test is to work.

And this is right, but the caveat at the end is critical. If you have a good estimate of the prevalence of breast cancer, and a bad estimate of the chance of a false positive, then you can use the first number to get a better estimate of the second. But if you have a really good idea of the false positive rate (maybe you’ve seen thousands of positive results and learned which ones turned out to be false positives), but a shaky idea of the prevalence of breast cancer (hell, I have no idea how likely some lump is to be cancerous), you’ll be better off going with your intuition for how accurate the test is—and using that to estimate breast cancer prevalence!
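
A minimal sketch of the mammogram calculation, assuming the standard textbook version of the problem (1% prevalence, 80% sensitivity, 9.6% false-positive rate), which is the version whose answer is 7.8%. The second function runs Bayes’ theorem backwards, as the previous paragraph suggests: if you trust your sense of what fraction of positive results turn out to be real, you can solve for the prevalence. Both function names are illustrative.

```python
def posterior_given_positive(prevalence: float, sensitivity: float,
                             false_positive_rate: float) -> float:
    """P(cancer | positive test) by Bayes' theorem."""
    true_pos = prevalence * sensitivity
    false_pos = (1 - prevalence) * false_positive_rate
    return true_pos / (true_pos + false_pos)


def prevalence_from_posterior(posterior: float, sensitivity: float,
                              false_positive_rate: float) -> float:
    """Invert the formula above: given the fraction of positives that are real,
    solve for the prevalence that would produce it."""
    return (posterior * false_positive_rate) / (
        sensitivity * (1 - posterior) + posterior * false_positive_rate
    )


SENSITIVITY = 0.80       # P(positive | cancer), textbook value
FALSE_POSITIVE = 0.096   # P(positive | no cancer), textbook value

ppv = posterior_given_positive(0.01, SENSITIVITY, FALSE_POSITIVE)
print(f"posterior: {ppv:.1%}")  # posterior: 7.8%

# An order-of-magnitude error in the prevalence guess moves the answer a lot.
for guess in (0.001, 0.01, 0.1):
    p = posterior_given_positive(guess, SENSITIVITY, FALSE_POSITIVE)
    print(f"prevalence {guess:.1%} -> posterior {p:.1%}")

# Going the other way recovers the 1% prevalence from the 7.8% posterior.
print(f"implied prevalence: {prevalence_from_posterior(ppv, SENSITIVITY, FALSE_POSITIVE):.1%}")
```

Which direction you run the formula in should depend on which number you actually trust; the math is the same either way.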

Scott says that “varying the value of the ‘unknown unknowns’ term until it says whatever justifies our pre-existing intuitions is the coward’s way out.” And this is one of the rare cases where I think he’s completely, unequivocally wrong. This isn’t the coward’s way out; it’s the only thing we can possibly do.

Reflective Equilibrium

If you find a convincing argument that generates an unlikely conclusion, you can accept the unlikely conclusion, you can decide that the premises of the argument were flawed, or you can decide the argument itself doesn’t work. If I collect some data, do some statistics, and calculate that taking Tylenol will cut my lifespan by thirty years, I don’t immediately throw away all my Tylenol—I look for where I screwed up my math. And that’s the correct, and rational, response.

If you think A is true and B is false, and find an argument that A implies B, you have three choices: you can decide A is false after all; you can decide B is true after all; or you can decide that the argument actually isn’t valid. Or you can adopt some probabilistic combination: it’s perfectly consistent to believe A is 60% likely to be true, B 60% likely to be false, and the argument 60% likely to be correct. But fundamentally you have to make a choice about which of the three pieces to adjust, and by how much.6
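
The 60/60/60 example can be checked with a union-bound argument. “A is true”, “B is false”, and “the argument is valid” can’t all hold at once, so the probabilities that each one fails must add up to at least 1; equivalently, the three credences can sum to at most 2. (For three claims like this the condition is also sufficient, so the sum is the whole test.) A minimal sketch, with a hypothetical function name:

```python
def coherent(p_a: float, p_not_b: float, p_valid: float) -> bool:
    """Can someone consistently hold these three credences?

    p_a:     credence that A is true
    p_not_b: credence that B is false
    p_valid: credence that the argument "A implies B" is valid
    The three claims are jointly impossible, so at least one must fail:
    (1 - p_a) + (1 - p_not_b) + (1 - p_valid) >= 1, i.e. the sum is <= 2.
    """
    return p_a + p_not_b + p_valid <= 2


print(coherent(0.6, 0.6, 0.6))  # True: the credences sum to 1.8
print(coherent(0.9, 0.9, 0.6))  # False: at least one of them has to give
```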

In the case of ivermectin, we have some data from some studies. We have an Inside View argument that, based on expected values computed from that data, taking ivermectin is probably worth it. And we have the Outside View argument that taking random long-shot drugs is not a great idea. And we have to reconcile these somehow.

First, we could reject, or disbelieve, the data. And we totally did that: a bunch of ivermectin studies are fraudulent or incompetent, and Scott argues pretty convincingly that some of the honest, competent studies are really picking up the benefits of killing off intestinal parasites. But even after doing that, we’re left with the Pascalian argument: ivermectin probably doesn’t work, but it might, and the costs of taking it are low, so we might as well. Do we listen to that argument, or to our gut belief that this can’t be a good idea?

A common trap that smart, math-oriented people fall into is thinking that the argument with numbers and calculations must be the better one. The Inside View argument did some math, and multiplied some percentages, and came up with an expected value; the Outside View argument comes from a fuzzy intuitive sense that medicine Doesn’t Work That Way. So the mathy argument should win out.

But in this case, we were doing calculations with numbers that were, you might remember, completely made up. Sure, the Outside View argument reflects a fuzzy intuitive sense of whether a random potential cure is likely to help us. The Inside View argument, on the other hand, reflects a fuzzy intuitive sense of whether ivermectin is likely to protect us from covid.

The only real difference is that we took the second fuzzy intuition, put a fuzzy number on it, and plugged it into some cost-benefit analysis formulas. And no matter what fancy formulas we use, they can never make our starting numbers less fuzzy. Given the choice between a fuzzy intuition, and an equally fuzzy intuition that we’ve done math to, I’m inclined to trust the first one. With fewer steps, there are fewer ways to screw up.
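
To see how fuzzy inputs stay fuzzy, here is a small Monte Carlo sketch. The ranges are illustrative assumptions, not estimates from any study: a chance of working anywhere from 0.1% to 10%, an effect of 5% to 20% if it works, and a drug-caused fatality rate anywhere from 1 in a million to 1 in a thousand. The formula is the same simple one used above; only the made-up inputs vary.

```python
import math
import random


def log_uniform(rng: random.Random, low: float, high: float) -> float:
    """Sample so that each order of magnitude between low and high is equally likely."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


def net_lives_per_day(rng: random.Random) -> float:
    p_works = log_uniform(rng, 1e-3, 1e-1)        # assumed range, not data
    effect = rng.uniform(0.05, 0.20)              # assumed range, not data
    fatality_rate = log_uniform(rng, 1e-6, 1e-3)  # assumed range, not data
    benefit = p_works * effect * 1_000            # 1,000 covid deaths per day
    harm = fatality_rate * 90_000                 # 90,000 patients per day
    return benefit - harm


rng = random.Random(0)  # fixed seed so the run is reproducible
samples = sorted(net_lives_per_day(rng) for _ in range(10_000))
share_negative = sum(s < 0 for s in samples) / len(samples)

print(f"5th percentile:  {samples[500]:+.1f} lives/day")
print(f"median:          {samples[5000]:+.1f} lives/day")
print(f"95th percentile: {samples[9500]:+.1f} lives/day")
print(f"share of draws where the drug does net harm: {share_negative:.0%}")
```

The spread of the output straddles zero: the formula faithfully carries the uncertainty of its inputs through to the answer, and nothing about doing the multiplication makes it smaller.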

Finding the Error

At this point I think we’ve reached roughly Scott’s position at the end of his essay. The Outside View argument is winning out in practice, but we haven’t articulated any specific problems with the Inside View argument. And this is uncomfortable, because they can’t both be right. We can say it’s more likely we screwed up the more complicated, mathier argument. But how did we screw it up?

And on reflection, the answer is that we’re confusing two different arguments. I think that “Sure, go ahead and take ivermectin, it probably won’t help but it might, and it probably won’t hurt either” is a pretty reasonable position, and was even more reasonable six months ago, when we knew less than we do now.7

I know a bunch of people who take Vitamin C, even though it’s not clear that accomplishes anything. I myself flip-flop between taking a multivitamin because it seems like it might make me healthier, and not taking a multivitamin because there’s no real evidence that it does. Taking ivermectin in case it’s helpful doesn’t really seem that different.

No, the crazy position is when we go full Insanity Wolf and take twenty different long-shot cures at once. That was the conclusion that seemed like it couldn’t possibly hold up, at least for me. And that’s also the point where it really does seem like the unknown unknowns start piling up. There are twenty different drugs that could all possibly cause negative side effects. There are 190 potential two-drug interactions and over a thousand potential three-drug interactions, and even if interactions are, in Scott’s words, “rarer than laypeople think”, that seems like a lot of room for something weird to happen.
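
The interaction counts are binomial coefficients, “20 choose 2” and “20 choose 3”. Python’s standard library computes them directly (`math.comb`, available since Python 3.8):

```python
from math import comb

N_DRUGS = 20

pairs = comb(N_DRUGS, 2)    # ways to pick 2 of the 20 drugs
triples = comb(N_DRUGS, 3)  # ways to pick 3 of the 20 drugs
# Every combination of two or more drugs: 2**20 subsets, minus the empty set
# and the 20 single-drug "combinations".
all_multi = 2**N_DRUGS - 1 - N_DRUGS

print(pairs, triples, all_multi)  # 190 1140 1048555
```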

So this is how we screwed up. We said these drugs are cheap and generally safe. But in order to make our math reasonable, we rounded “generally safe” down to “safe”, and ignored the risks entirely. As long as the risks are small enough, that works fine; but at some point we cross a threshold where we can’t just ignore all the downsides when doing our calculations.

Is taking twenty drugs over that threshold? I don’t know, but it seems likely. Taking that many drugs probably won’t hurt you, but it might! And it will definitely be expensive and annoying, and a lot of those drugs have common mild-but-unpleasant side effects. And the potential benefits are relatively small, and relatively unlikely; it’s easy for them to be swamped by all these downsides.
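
One way to see how small risks pile up: if each of twenty drugs independently has some small chance of causing an unpleasant side effect, the chance that at least one does is 1 - (1 - r)^20. The per-drug rates below are placeholder assumptions, not measured figures for any real supplement, and the independence assumption ignores interactions entirely.

```python
def p_any_side_effect(per_drug_risk: float, n_drugs: int) -> float:
    """Chance that at least one of n drugs causes a side effect,
    assuming each does so independently with the same probability."""
    return 1 - (1 - per_drug_risk) ** n_drugs


for risk in (0.001, 0.005, 0.02):  # hypothetical per-drug risks
    print(f"per-drug risk {risk:.1%}: one drug {p_any_side_effect(risk, 1):.1%}, "
          f"twenty drugs {p_any_side_effect(risk, 20):.1%}")
```

For small risks the total is close to twenty times the per-drug risk, so a cocktail turns a negligible downside into a noticeable one.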

But now we’re talking about the interaction of hundreds of numbers that are both small and uncertain. We can’t get away with ignoring the risks, but we can’t realistically quantify them either. All we can do is make some half-assed guesses, and our conclusions will change a lot depending on exactly which guesses we make. So we can’t do a useful Inside View calculation at all. Instead we’re basically forced to rely on the Outside View argument: taking twenty pills every day that probably don’t even work seems kinda dumb.

But then why take ivermectin specifically, rather than Vitamin D or curcumin or some other possible treatment? I dunno. You’re buying a long-shot lottery ticket. Pick your favorite number and hope it pays out.

The Takeaway

A back-of-the-envelope cost-benefit analysis tells us that taking ivermectin for covid might have positive expected value. If we follow that logic to its conclusion, we wind up taking twenty different supplements and this seems like it can’t be wise.

A blinkered view of rationality tells us to ignore our intuition and follow the math. A more expansive view realizes that if the numbers we’re plugging into our cost-benefit analysis are shakier than that intuition, then we should take the intuition seriously. Cost-benefit analyses and other “mathematically rational” methods are only as good as the numbers and arguments that we bring to them.

But even with shaky numbers, we can learn things from comparing our intuitions with the result of our calculations. Figuring out why we get two different answers can teach us a lot about our reasoning, and help us figure out where we went wrong. Taking the full Insanity Wolf cocktail really seems qualitatively different from picking your favorite long-shot drug, but the way we set up our math hid that from us.

Finally: please get vaccinated, and get your booster shot. And if you have a choice between Paxlovid and ivermectin, you should probably take the Paxlovid.

Questions about cost-benefit analysis, or where the math breaks down? Do you know something I missed? Tweet me @ProfJayDaigle or leave a comment below.

-

I originally misread the CDC page and interpreted the weekly average of daily numbers as weekly numbers. I’ve edited the piece throughout to reflect the true numbers, but it doesn’t change any of the conclusions, since the same error happened to every rate I discussed in the piece.

-

There would also be benefits from fewer people being hospitalized, fewer people suffering long-term health consequences, fewer people being miserable and bedridden for a week, etc. I’m going to talk about deaths pretty exclusively because it’s easier to talk about just one number.

-

This is very different from claims that ivermectin is a miracle cure, and we should take that instead of getting vaccinated. Ivermectin is at best mildly beneficial; vaccines are safe and effective and you should get a booster shot if you haven’t already. We’re talking about whether the small possibility of a minor benefit from ivermectin makes it worth taking.

-

These numbers are reported a little weirdly. Looking at the study, it seems like Paxlovid cut hospitalizations by 85%, from 41/612 to 6/607; it cut deaths by 100% from 10/612 to 0/607. I think the 90% figure is the extent to which it cut (hospitalizations plus deaths), since that math checks out, but that’s a slightly weird metric to judge by.
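
The arithmetic in this footnote is easy to check. A small sketch with an illustrative helper name, taking the trial counts above at face value and simply adding hospitalizations and deaths together (which would double-count anyone who was both hospitalized and died):

```python
def relative_reduction(events_control: int, n_control: int,
                       events_treated: int, n_treated: int) -> float:
    """1 - (risk in treated arm) / (risk in control arm)."""
    return 1 - (events_treated / n_treated) / (events_control / n_control)


print(f"hospitalizations: {relative_reduction(41, 612, 6, 607):.0%}")           # 85%
print(f"deaths:           {relative_reduction(10, 612, 0, 607):.0%}")           # 100%
print(f"hosp. + deaths:   {relative_reduction(41 + 10, 612, 6 + 0, 607):.0%}")  # 88%
```

Under that reading the combined figure comes out around 88%, which rounds to the headline 90%.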

-

There are systematic ways of estimating this, but they would all require numbers for “how inflated do you expect non-significant effect sizes in published studies to be?” If you spend a lot of time with the medical literature you might have a number to put here; I don’t.

-

David Chapman calls this meta-rational reasoning. I see where he’s coming from but think that’s an unnecessarily complex and provocative way of talking about it.

-

Again, "Ivermectin is a miracle cure, take that instead of getting vaccinated" is, in fact, a completely and totally nonsense position. And many public "ivermectin advocates" are saying that, and they are wrong. But that’s not what we’re talking about here.

Tags: math models bayesianism philosophy of science replication crisis

Support my writing at Ko-Fi
